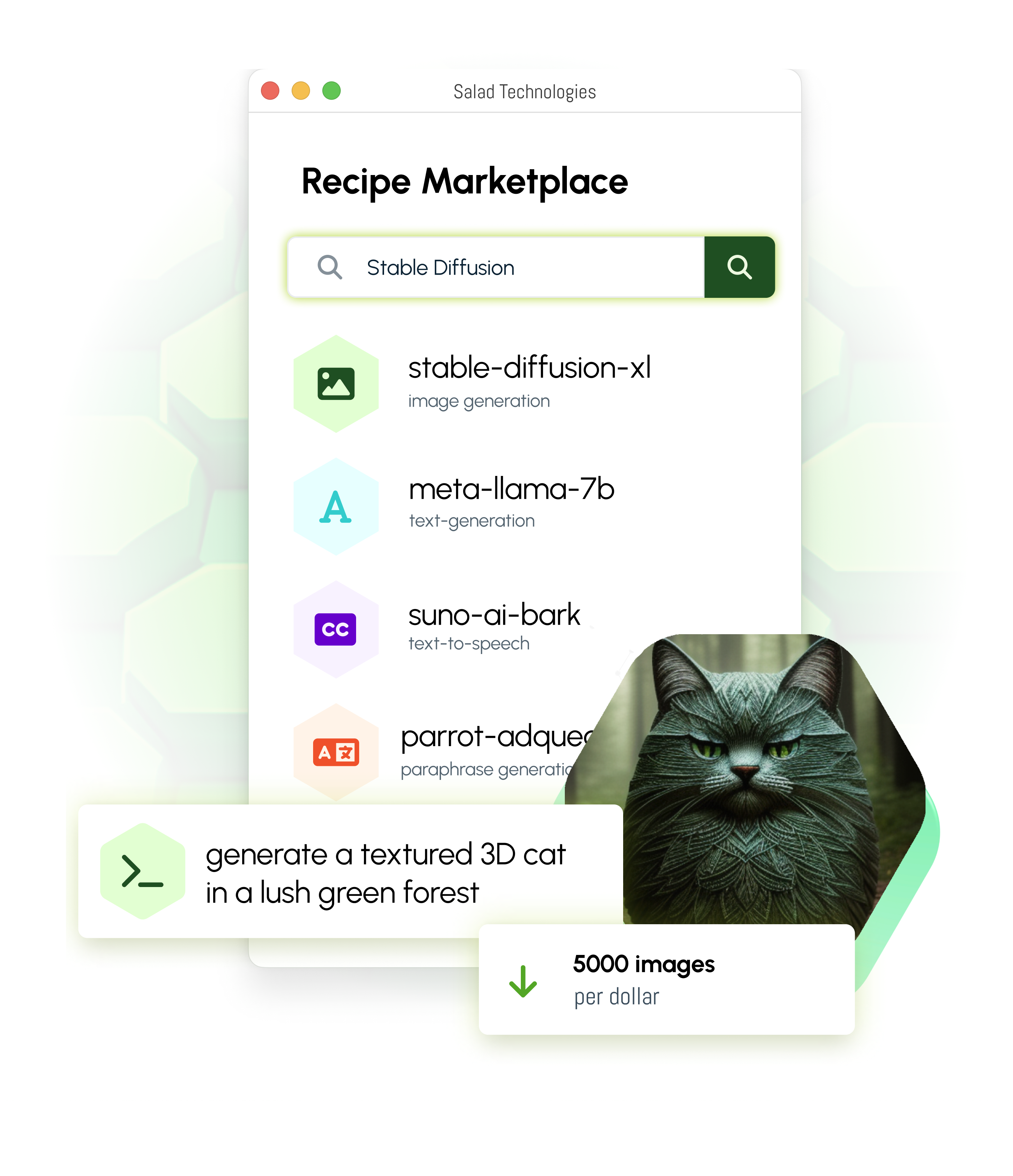

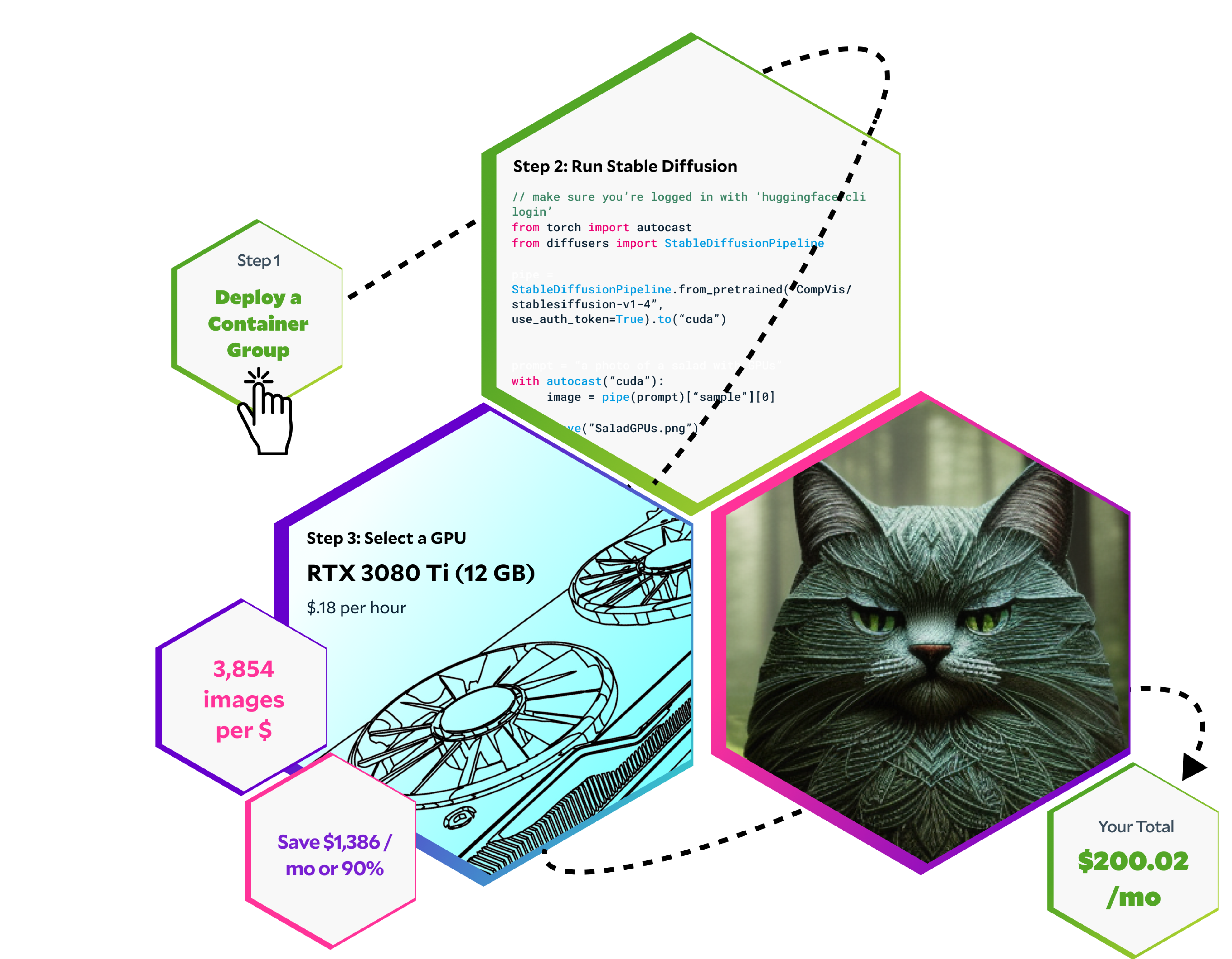

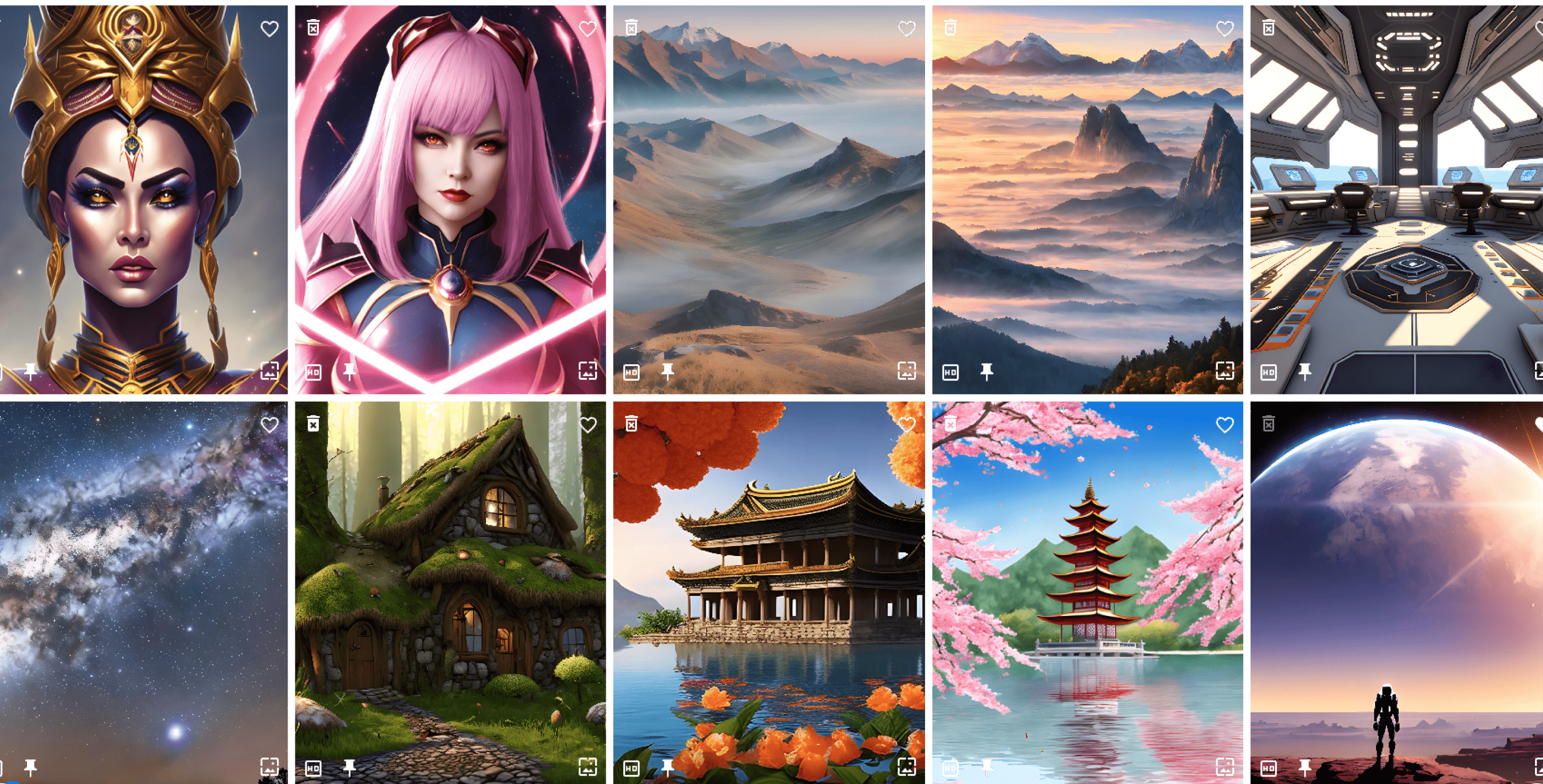

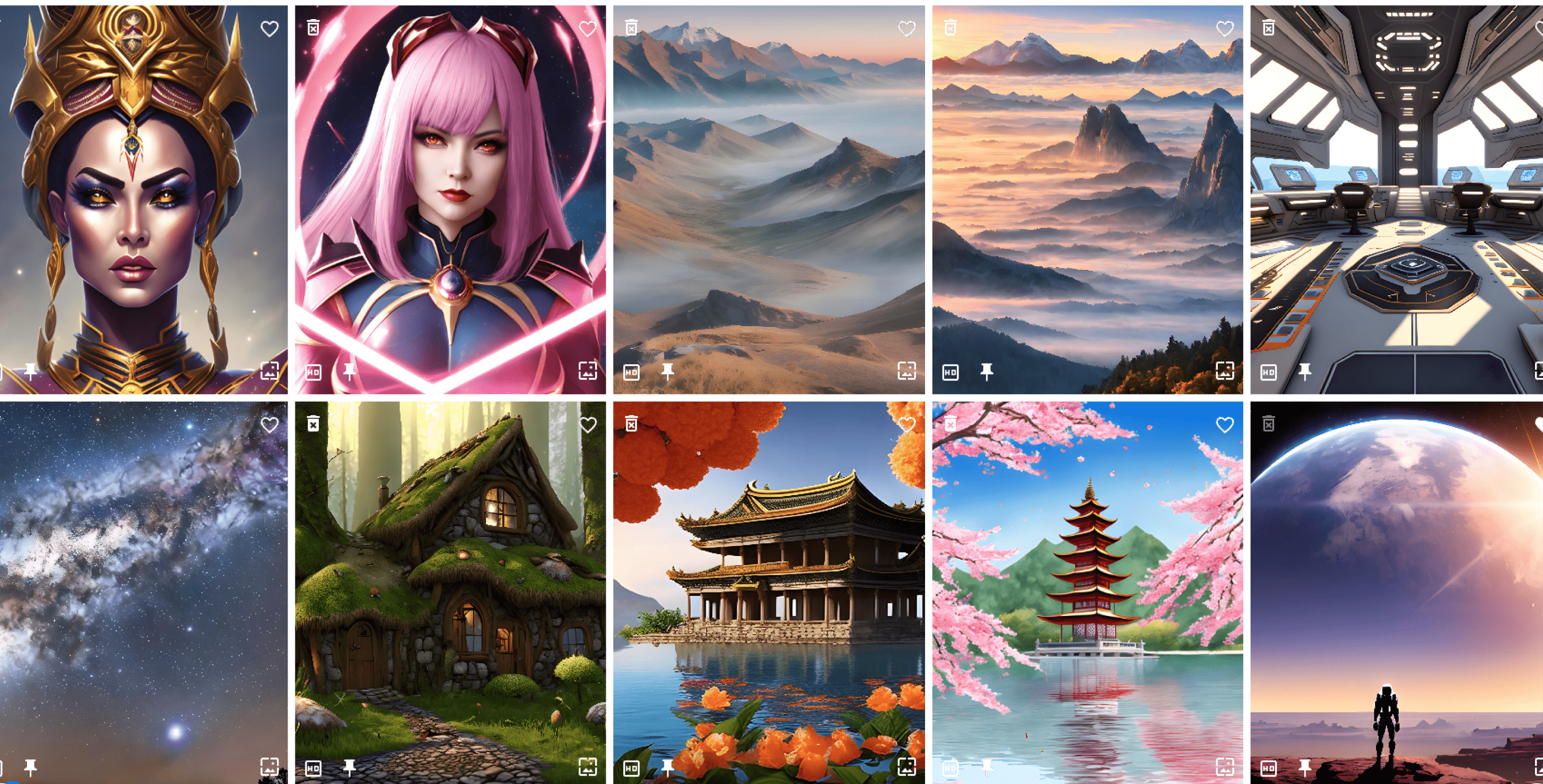

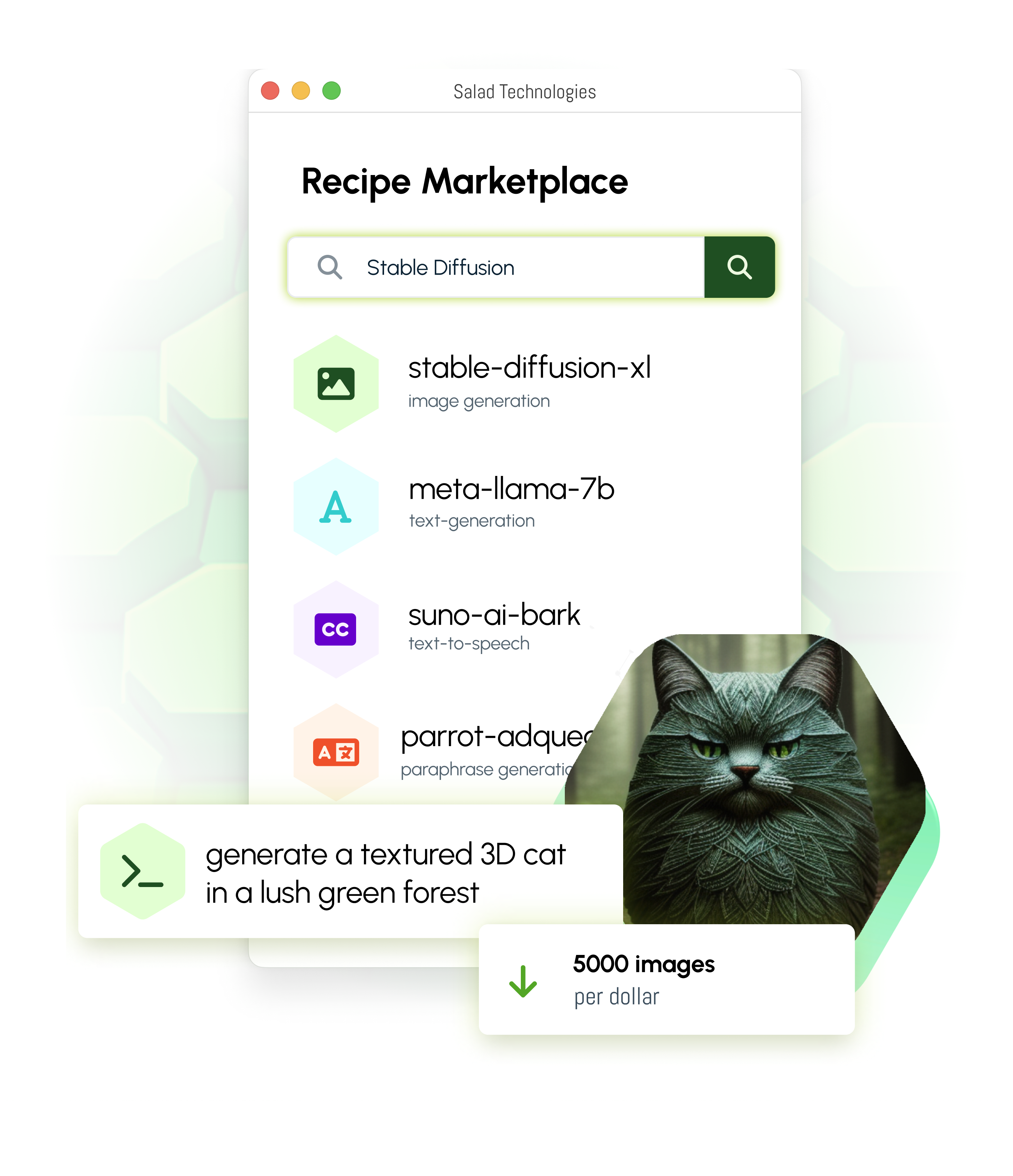

Text-to-Image

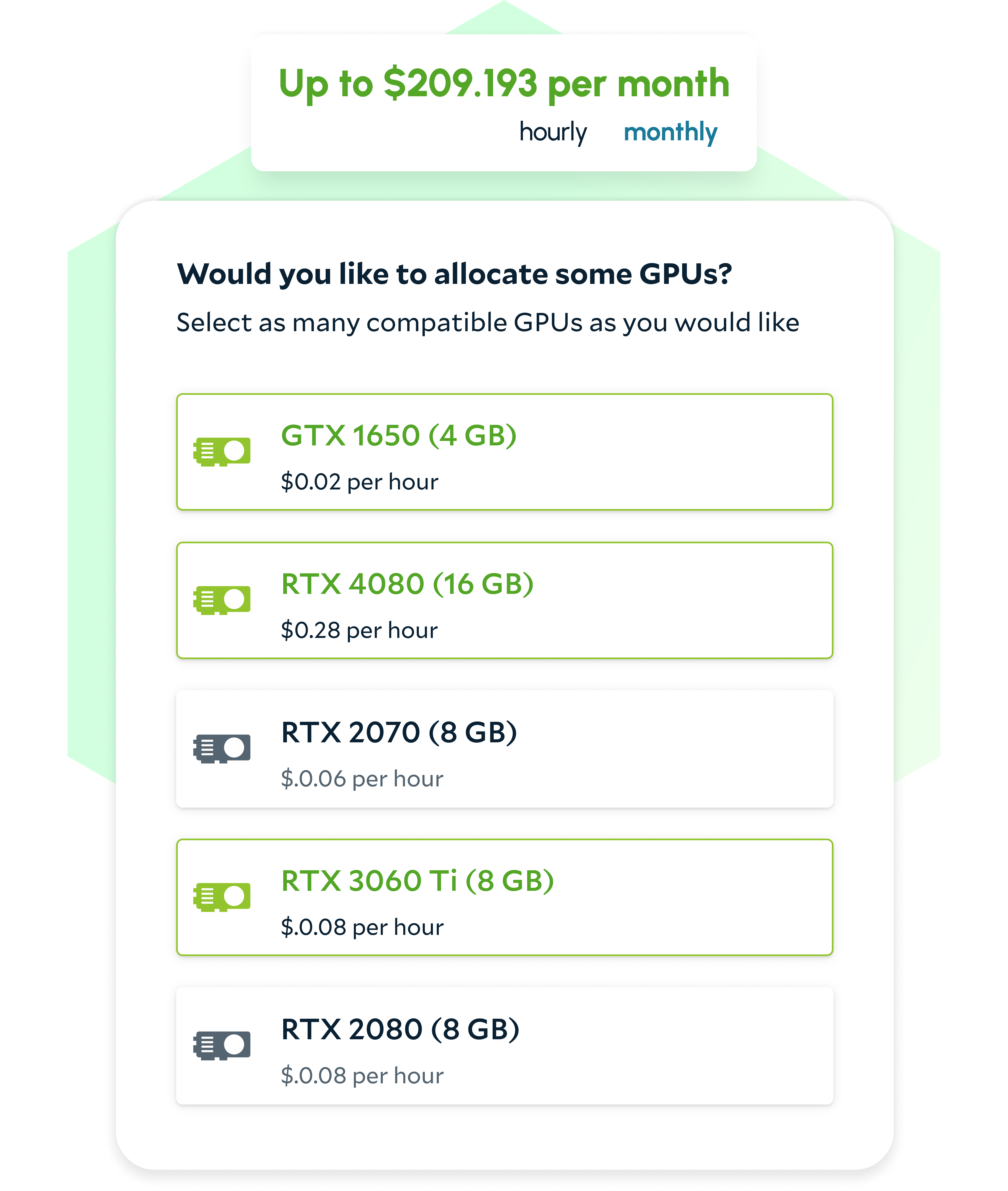

Scale easily to thousands of GPU instances worldwide without the need to manage VMs or individual instances, all with a simple usage-based price structure.

Deploy AI/ML production models without headaches on the lowest-priced consumer GPUs (from $0.02/hr).

Save up to 90% on compute cost compared to expensive high-end GPUs, APIs and hyperscalers.

Have questions about enterprise pricing for SaladCloud?

Consumer GPUs like the RTX 4090 offer better cost-performance for many use cases like AI inference & batch jobs. SaladCloud offers a fully-managed container service opening up access to thousands of AI-enabled consumer GPUs on the world’s largest distributed network.

Lowest GPU prices. Incredible scalability. 85% less cost than hyperscalers.

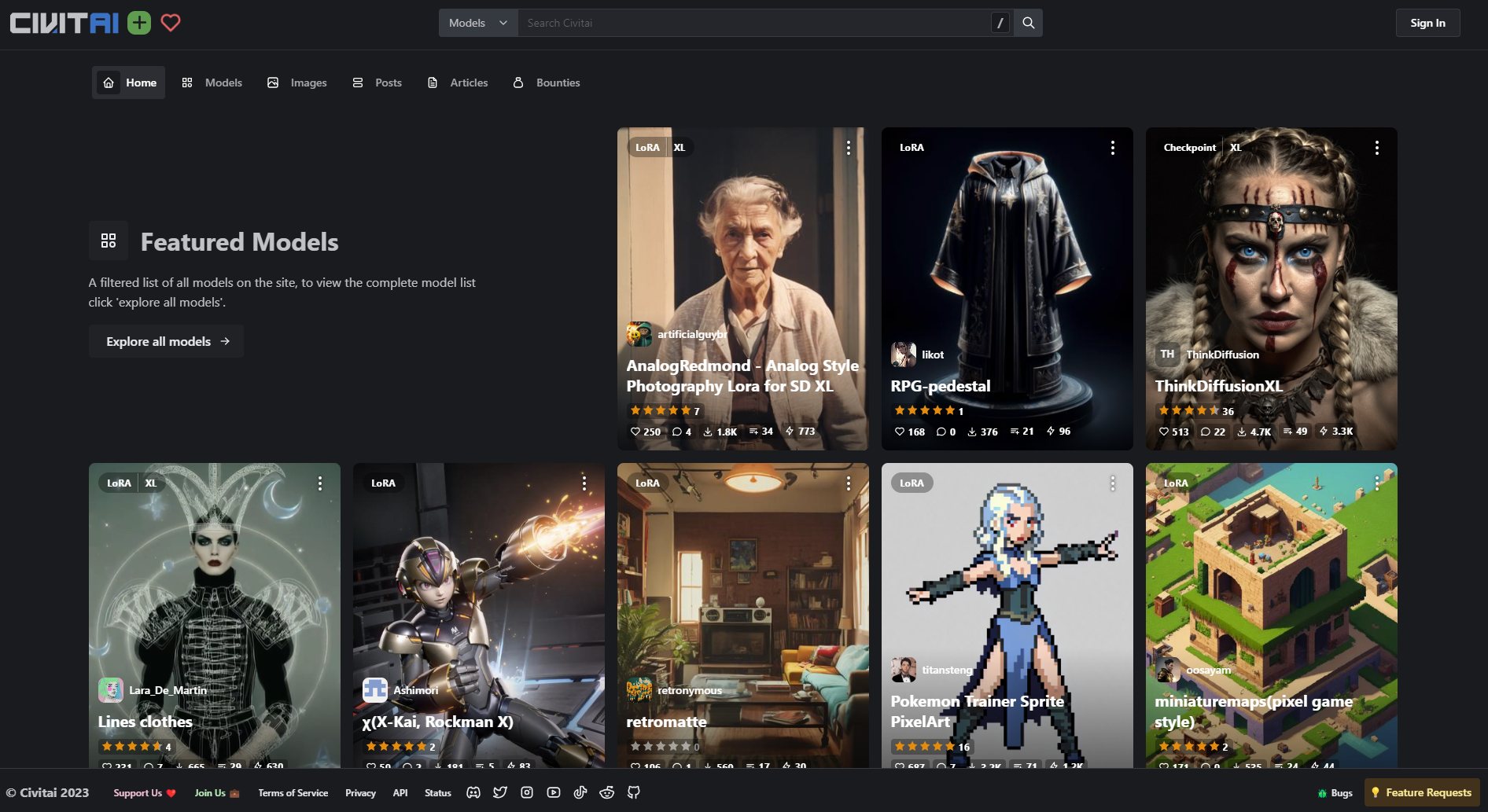

“By switching to Salad, Civitai is now serving inference on over 600 consumer GPUs to deliver 10 Million images per day and training more than 15,000 LoRAs per month. Salad not only had the lowest GPU prices in the market but also offered us incredible scalability.”

“On Salad’s consumer GPUs, we are running 3X more scale at half the cost of A100s on our local provider and almost 85% less cost than the two major hyperscalers we were using before. I’m not losing sleep over scaling issues anymore.”

“Salad makes it more realistic to keep up with deploying these new models. We might never deploy most of them if we had to pay AWS cost for them.”

Save up to 50% on orchestration services from big box providers, plus discounts on recurring plans.

Distribute data batch jobs, HPC workloads, and rendering queues to thousands of 3D-accelerated GPUs.

Bring workloads to the brink on low-latency edge nodes located in nearly every corner of the planet.

Deploy Salad Container Engine workloads alongside your existing hybrid or multicloud configurations.

You are overpaying for managed services and APIs. Serve TTS inference on Salad's consumer GPUs and get 10X-2000X more inferences per dollar.

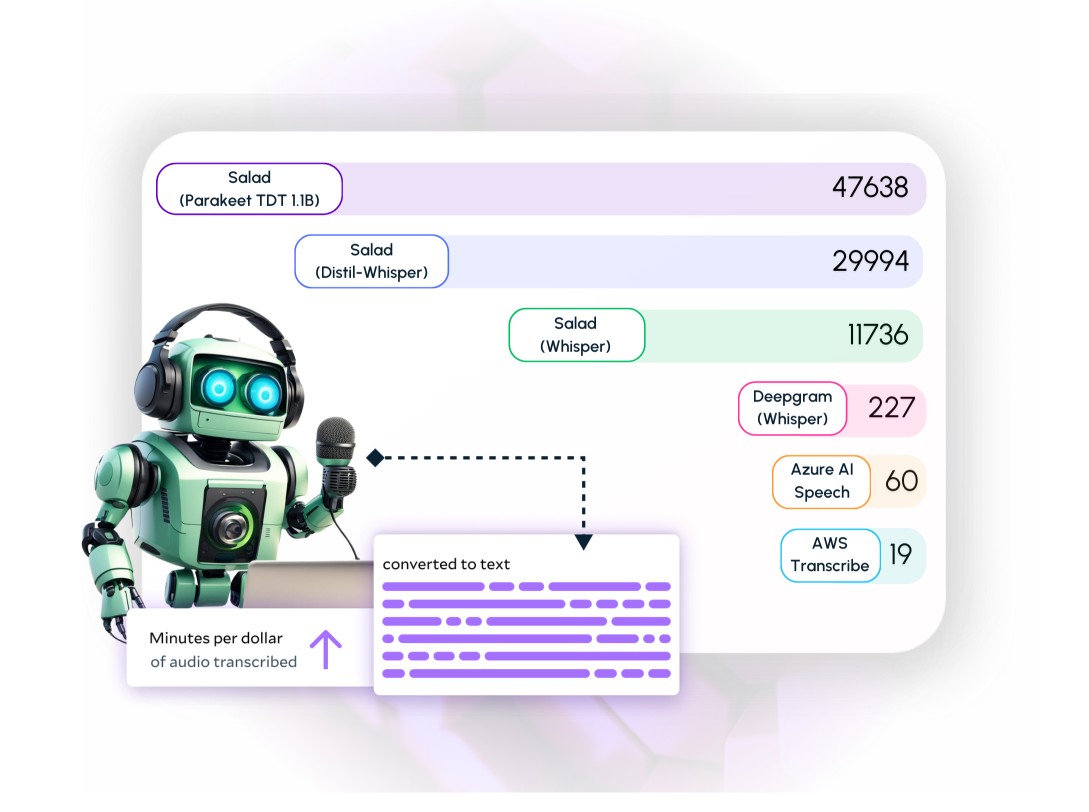

If you are serving AI transcription, translation, captioning, etc. at scale, you are overpaying by thousands of dollars today. Serve speech-to-text inference on Salad for up to 90% less cost.

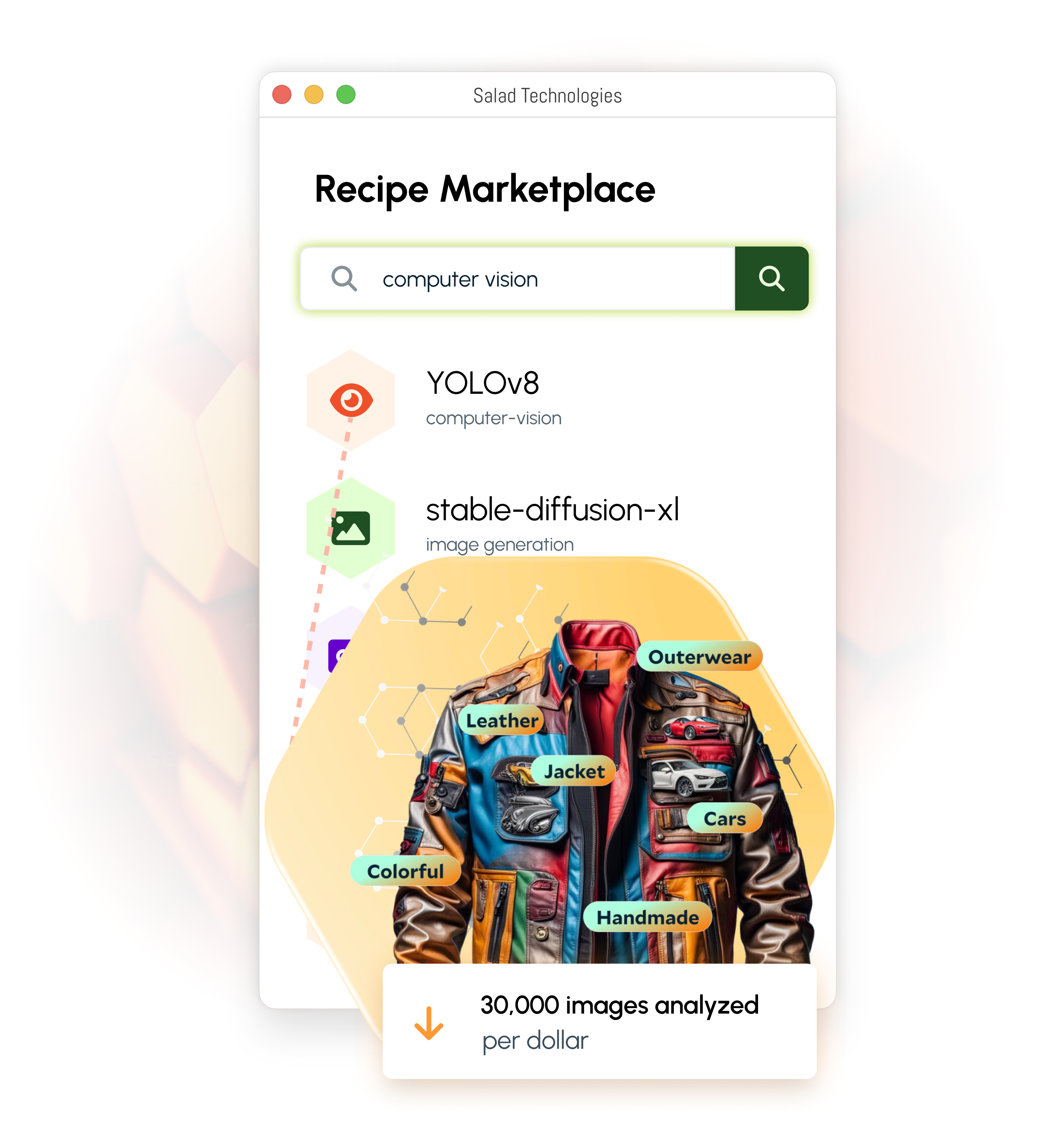

Simplify and automate the deployment of computer vision models like YOLOv8 on 10,000+ consumer GPUs on the edge. Save 50% or more on your cloud cost compared to managed services/APIs.

Running Large Language Models (LLMs) on Salad is a convenient, cost-effective way to deploy various applications without managing infrastructure or sharing compute.

We can’t print our way out of the chip shortage. Run your workloads on the edge with already available resources. Democratization of cloud computing is the key to a sustainable future, after all.

High TCO on popular clouds is a well-known secret. With SaladCloud, you just containerize your application and choose your resources, and we manage the rest, lowering your TCO and getting you to market quickly.

Over 1 million individual nodes and hundreds of customers trust Salad with their resources and applications.

You don’t have to manage any Virtual Machines (VMs).

No ingress/egress costs on SaladCloud. No surprises.

Save time & resources with minimal DevOps work.

Scale without worrying about access to GPUs.