Save up to 90% on Voice AI inference

Whether you are serving inference for Speech-to-Text, Text-to-Speech, or chatbots, or batch-translating thousands of hours of audio, SaladCloud’s consumer GPUs can reduce your cloud costs by up to 90% compared to managed services and APIs.

Have questions about enterprise pricing for SaladCloud?

Book a 15 min call with our team.

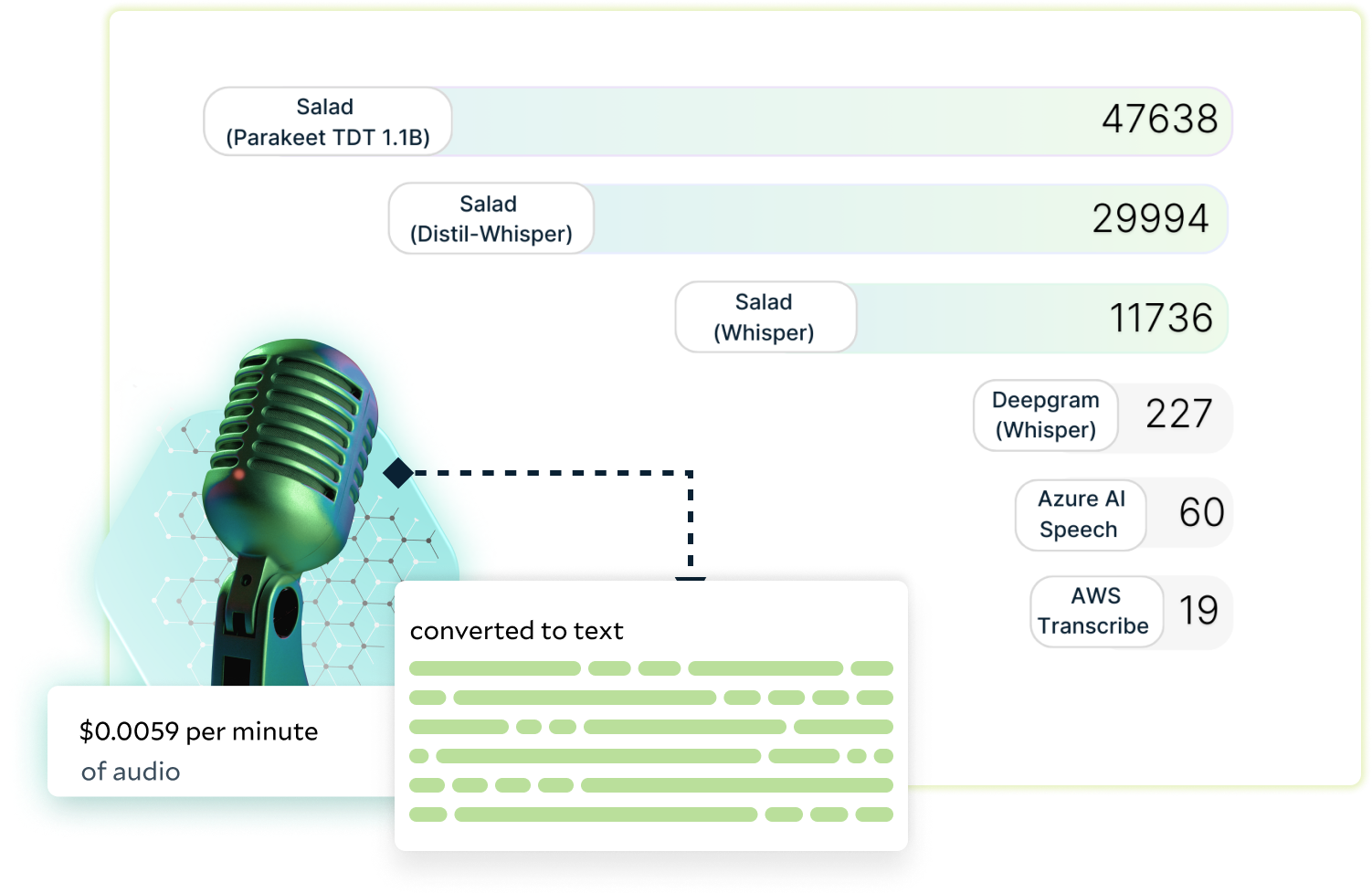

Speech-to-Text: 47,638 minutes per $1 - Parakeet TDT 1.1B

Speech-to-Text: 29,994 minutes per $1 - Distil-Whisper Large V2

Text-to-Speech: 6 million words per $1 - OpenVoice

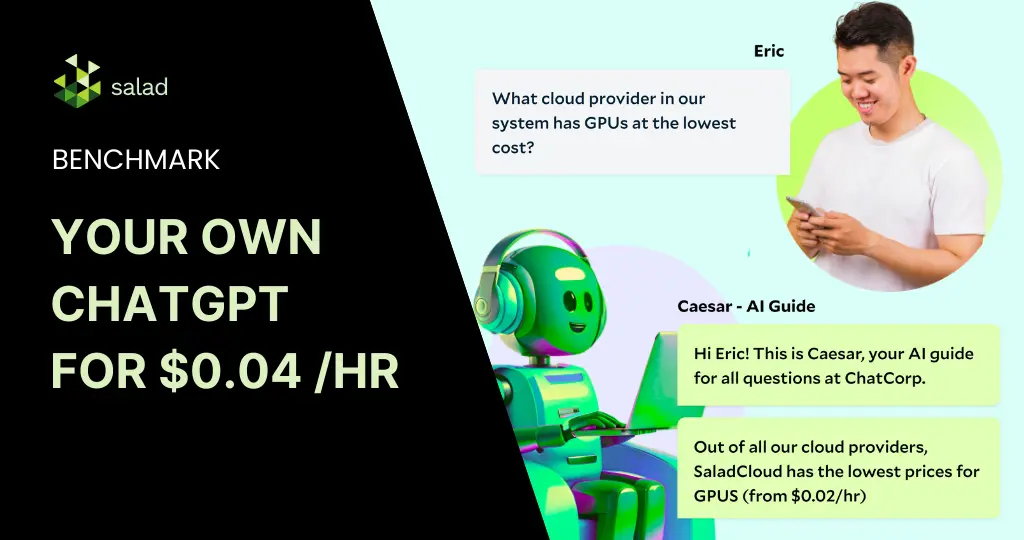

Your own ChatGPT with Ollama, ChatUI & Salad

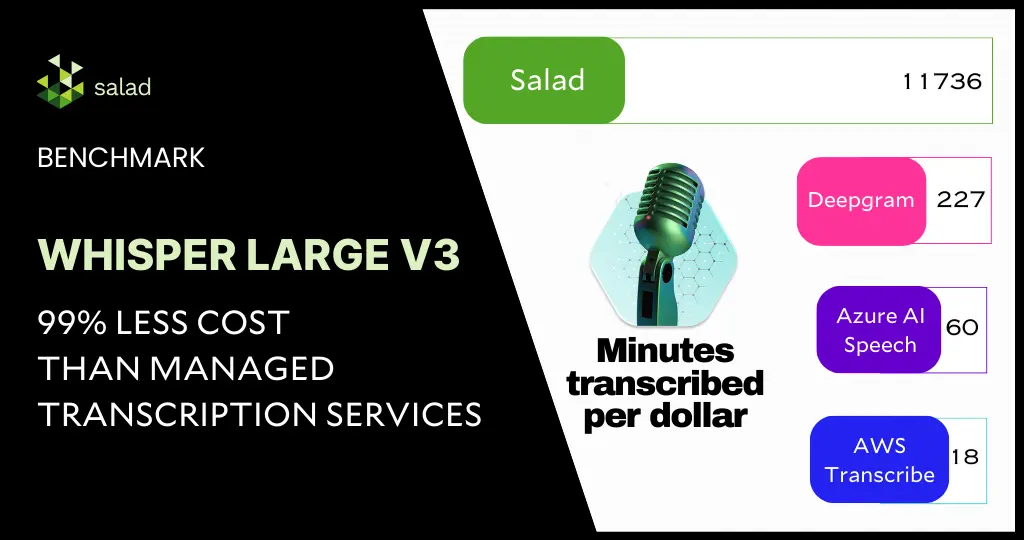

Speech-to-Text: 11,736 minutes per $1 - Whisper Large V3

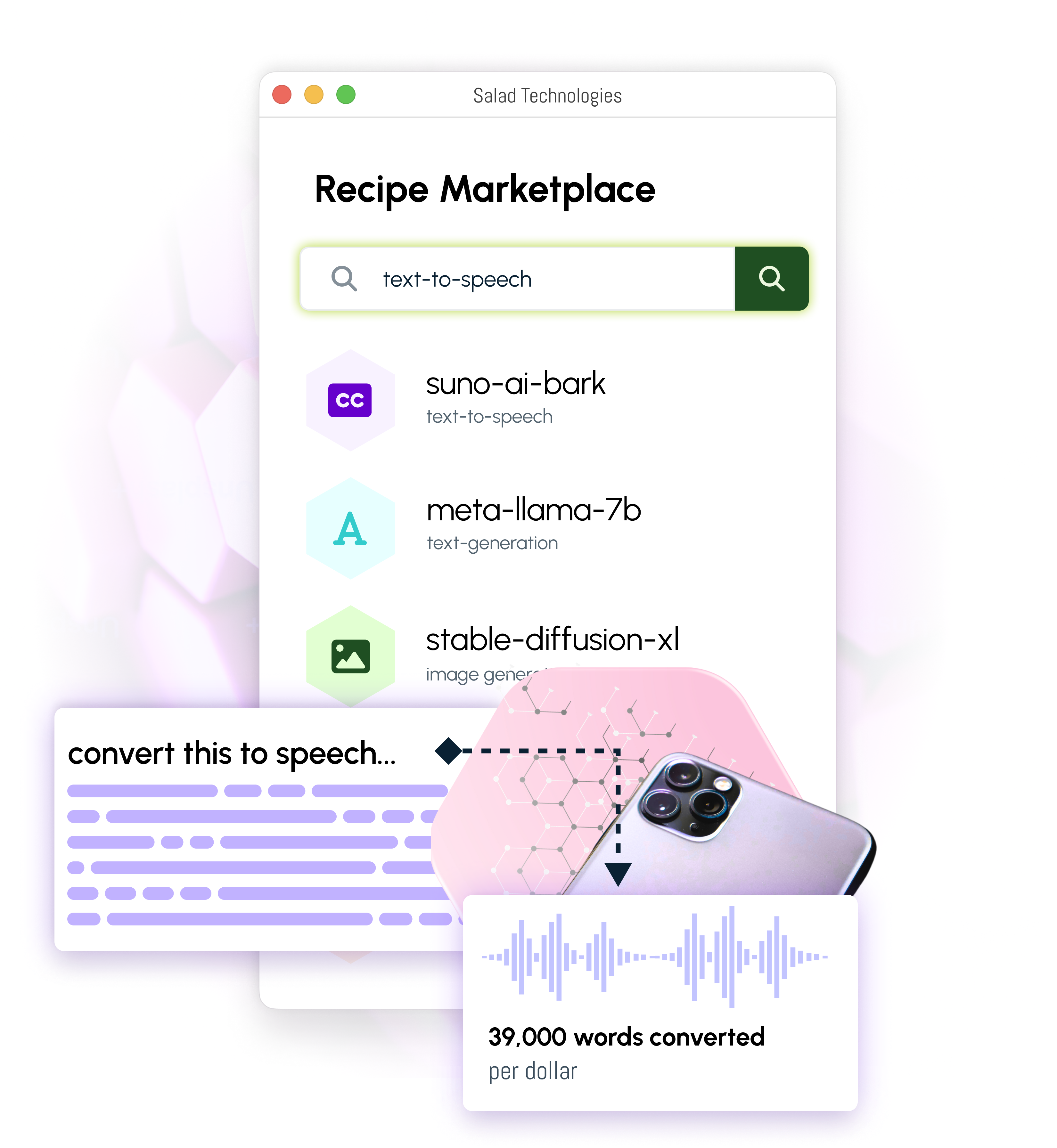

Text-to-Speech: 39,000 words per $1 - Bark
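To put the Speech-to-Text figures above in budgeting terms, here is a minimal Python sketch that converts "minutes per $1" into an estimated cost for a given volume of audio. The function name `cost_for_hours` and the 1,000-hour workload are illustrative assumptions, and the result is a rough estimate that only holds if the benchmark throughput applies to your audio.

```python
# Throughput figures quoted above, in minutes of audio transcribed per US dollar.
MINUTES_PER_DOLLAR = {
    "Parakeet TDT 1.1B": 47_638,
    "Distil-Whisper Large V2": 29_994,
    "Whisper Large V3": 11_736,
}


def cost_for_hours(minutes_per_dollar: float, audio_hours: float) -> float:
    """Estimated cost in dollars to transcribe `audio_hours` of audio.

    Hypothetical helper: it assumes the quoted benchmark rate holds
    for the whole workload.
    """
    return audio_hours * 60 / minutes_per_dollar


if __name__ == "__main__":
    # Illustrative workload: 1,000 hours of audio.
    for model, rate in MINUTES_PER_DOLLAR.items():
        print(f"{model}: ${cost_for_hours(rate, 1_000):.2f} per 1,000 hours of audio")
```

At these rates, 1,000 hours of audio works out to roughly $1.26 with Parakeet TDT 1.1B and roughly $5.11 with Whisper Large V3.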

Speech-to-Text

For use cases such as automatic speech recognition (ASR), translation, captioning, and subtitling, SaladCloud costs at least 90% less than APIs and hyperscalers.

Text-to-Speech

Save up to 90% on Text-to-Speech (TTS) inference with SaladCloud’s consumer GPUs. RTX and GTX series GPUs deliver the best cost-performance for TTS inference.

The Lowest Cost For Voice AI Inference

Voice AI models are perfect for consumer GPUs, giving incredible cost-performance and saving thousands of dollars compared to running on public clouds.

Scale easily to thousands of GPU instances worldwide without the need to manage VMs or individual instances, all with a simple usage-based price structure.

Transcription

Save up to 98% on transcription costs compared to public clouds, with about 60x real-time speed on RTX 3090s.
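As a rough illustration of what "60x real-time" means for capacity planning, the Python sketch below estimates GPU-hours and the number of GPUs needed to finish a batch by a deadline. The function names and the example workload are hypothetical, and the calculation assumes the work splits evenly across GPUs with no startup or queueing overhead.

```python
import math


def gpu_hours(audio_hours: float, realtime_factor: float = 60.0) -> float:
    """GPU-hours needed to transcribe `audio_hours` of audio.

    A real-time factor of 60 means one hour of audio takes about
    one minute of compute on a single GPU.
    """
    return audio_hours / realtime_factor


def gpus_needed(audio_hours: float, deadline_hours: float,
                realtime_factor: float = 60.0) -> int:
    """Smallest number of GPUs that finishes within `deadline_hours`.

    Assumes perfect parallelism, which is an idealisation.
    """
    return math.ceil(gpu_hours(audio_hours, realtime_factor) / deadline_hours)


if __name__ == "__main__":
    # Illustrative workload: 1,000 hours of audio, finished within 1 hour.
    print(f"GPU-hours: {gpu_hours(1_000):.1f}")
    print(f"GPUs needed: {gpus_needed(1_000, deadline_hours=1)}")
```

Under these assumptions, 1,000 hours of audio needs about 16.7 GPU-hours, so 17 GPUs would finish it in roughly an hour.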

Translation

Get better machine translation economics on SaladCloud's network of GPUs at the lowest market prices.

Captioning / Subtitles

Cut AI captioning/subtitle generation costs by at least 50% on SaladCloud.